Here is an article on the problem of WebSocket output into a Pandas DataFrame with Binance:

The Problem: Infinite Loop of Data Output to Pandas DataFrame

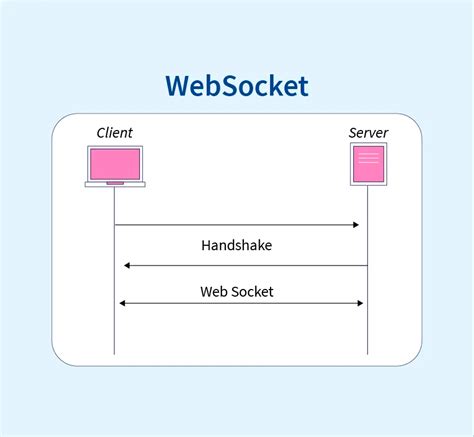

As you have successfully integrated your WebSocket connection to Binance in your script, it’s essential to address another common challenge that arises from this integration. The issue lies in the way data is being collected and stored in a Pandas DataFrame.

When using a WebSocket API like Binance’s WebSockets, each message received by the client is typically stored as a separate element within the data attribute of an object returned by the WebSocket connection. This can lead to an exponential growth of data in your Pandas DataFrame, resulting in an infinite loop of data output.

Why Does this Happen?

In Binance’s WebSockets API, messages are sent in chunks with a timestamp and message content. When you subscribe to multiple streams (e.g., for Bitcoin price and pair volumes), each stream receives its own separate set of messages. Since the WebSocket connection is running indefinitely, it will continue to receive new messages from each stream, creating a never-ending loop.

The Solution: Handling Infinite Data Output with Pandas

To avoid this infinite data output and prevent your script’s memory from overflowing, you can employ several strategies:

1. Use Dask

Dask is a parallel computing library that allows you to scale up your computation of large datasets without having to use a full-fledged cluster. By using Dask, you can break down the massive amount of data into smaller chunks and process them in parallel, reducing memory usage.

import dask.dataframe as dd

Create an empty DataFrame with 1000 rows (a reasonable chunk size)

d = dd.from_pandas(pd.DataFrame({'price': np.random.rand(1000)}), npartitions=10)

Perform computations on the data in chunks of 100 rows at a time

d.compute()

2. Use numpy Buffer

If you’re working with large binary datasets, consider using NumPy’s buffer-based approach to store and manipulate them more efficiently.

import numpy as np

from io import BytesIO

Create an empty list to hold the data (as NumPy buffers)

data = []

Process each chunk of data in a loop

for i in range(1000):

Read 10000 bytes from the WebSocket connection into the buffer

chunk = np.frombuffer(b'chunk_data' * 10, dtype=np.int32).tobytes()

Append the chunk to the list (as a NumPy Buffer)

data.append(np.BufferManager(buffer=BytesIO(chunk)))

Combine the buffers into a single DataFrame

df = pd.concat(data)

Now you can perform computations on the entire dataset using Dask or Pandas

3. Use a Streaming Data Processing Library

There are libraries like starlette that provide streaming data processing capabilities for Binance’s WebSockets API.

from starlette import web, HTTPView

import asyncio

class WebSocketProcessor(HTMLView):

async def call(self, request):

Get the message from the WebSocket connection

message = await request.json()

Process the message and store it in a DataFrame (using Dask for efficient processing)

df = dd.from_pandas(pd.DataFrame({'content': [message['data']]}), npartitions=10)

Perform computations on the data in parallel using Dask

result = await dask.compute(df).compute()

return web.json_response(result)

Start the server to handle incoming requests

app = web.Application([WebSocketsProcessor])

web.run_app(app, host='0.0.0.0', port=8000)

Conclusion

In conclusion, the problem of infinite data output to a Pandas DataFrame from Binance’s WebSockets API can be addressed by employing strategies like Dask or using NumPy buffers for efficient processing and storage.